Situated Tangible Robot Programming

Y. Sefidgar, P. Agarwal, and M. Cakmak, “Situated Tangible Robot Programming,” in ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2017, pp. 473–482, doi: 10.1145/2909824.3020240.

Abstract

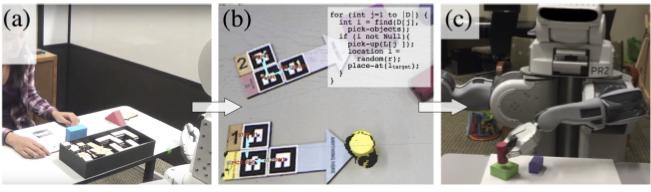

This paper introduces situated tangible robot programming, whereby a robot is programmed by placing specially designed tangible "blocks" in its workspace. These blocks are used to annotate objects, locations, or regions, and to specify actions and their ordering. The robot compiles a program by detecting blocks and objects in its workspace and grouping them into instructions by solving constraints. We present a proof-of-concept implementation using blocks with unique visual markers in a pick-and-place task domain. Three user studies evaluate the intuitiveness and learnability of situated tangible programming and iterate on the block design. We characterize common challenges and gather feedback on how to further improve the design of blocks. Our studies demonstrate that people can interpret, generalize, and create many different situated tangible programs with minimal instruction or with no instruction at all.
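The abstract says the robot groups detected blocks and objects into instructions by solving constraints. The following is a toy sketch of that idea, not the authors' implementation. Everything in it is an assumption made for illustration: the hypothetical labels (`pick`, `place`, `step`), the rule that each action block must annotate exactly one distinct object or location and carry exactly one distinct ordering block, and the use of brute-force, minimum-total-distance matching as the constraint solver.

```python
from dataclasses import dataclass
from itertools import permutations
from math import dist

ACTION_LABELS = ("pick", "place")  # hypothetical action-block labels

@dataclass(frozen=True)
class Detection:
    """One detected marker or object in the workspace (hypothetical format)."""
    label: str      # e.g. "pick", "place", "step", or an object/location name
    x: float
    y: float
    step: int = 0   # only meaningful for "step" (ordering) blocks

def best_assignment(sources, targets):
    """Brute-force one-to-one matching of sources to distinct targets that
    minimises summed Euclidean distance (adequate for a handful of blocks)."""
    best, best_cost = None, float("inf")
    for perm in permutations(targets, len(sources)):
        cost = sum(dist((s.x, s.y), (t.x, t.y)) for s, t in zip(sources, perm))
        if cost < best_cost:
            best, best_cost = list(zip(sources, perm)), cost
    if best is None:
        raise ValueError("constraints unsatisfiable: too few targets")
    return best

def compile_program(detections):
    """Group detections into an ordered list of (action, target) instructions."""
    actions = [d for d in detections if d.label in ACTION_LABELS]
    steps = [d for d in detections if d.label == "step"]
    things = [d for d in detections
              if d.label not in ACTION_LABELS and d.label != "step"]
    # Assumed constraint 1: each action annotates exactly one distinct object/location.
    target_of = dict(best_assignment(actions, things))
    # Assumed constraint 2: each action is paired with exactly one distinct step block.
    step_of = dict(best_assignment(actions, steps))
    ordered = sorted(actions, key=lambda a: step_of[a].step)
    return [(a.label, target_of[a].label) for a in ordered]

scene = [
    Detection("cup", 0.0, 0.0), Detection("tray", 5.0, 0.0),
    Detection("pick", 0.5, 0.0), Detection("place", 5.5, 0.0),
    Detection("step", 0.5, 1.0, step=1), Detection("step", 5.5, 1.0, step=2),
]
print(compile_program(scene))  # [('pick', 'cup'), ('place', 'tray')]
```

Brute-force matching keeps the sketch short. It grows factorially with the number of blocks, so a real system would more plausibly use a dedicated assignment or constraint solver.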

BibTeX Entry

@inproceedings{sefidgar2017hri,
  title = {Situated Tangible Robot Programming},
  author = {Sefidgar, Yasaman and Agarwal, Prerna and Cakmak, Maya},
  year = {2017},
  booktitle = {ACM/IEEE International Conference on Human-Robot Interaction (HRI)},
  publisher = {Association for Computing Machinery},
  pages = {473--482},
  doi = {10.1145/2909824.3020240},
  isbn = {9781450343367},
  note = {Best paper award finalist},
  type = {conference},
  www-note = {Best paper award finalist}
}